Agent-FLAN: Designing Data and Methods of Effective Agent Tuning for Large Language Models

Abstract

Open-sourced Large Language Models (LLMs) have achieved great success in various NLP tasks; however, they are still far inferior to API-based models when acting as agents. How to integrate agent ability into general LLMs has become a crucial and urgent problem. This paper first delivers three key observations: (1) the current agent training corpus entangles format following with agent reasoning, which shifts it significantly from the distribution of the pre-training data; (2) LLMs exhibit different learning speeds on the capabilities required by agent tasks; and (3) current approaches improve agent abilities at the cost of introducing hallucinations. Based on these findings, we propose Agent-FLAN to effectively Fine-tune LANguage models for Agents. Through careful decomposition and redesign of the training corpus, Agent-FLAN enables Llama2-7B to outperform prior best works by 3.5% across various agent evaluation datasets. With comprehensively constructed negative samples, Agent-FLAN greatly alleviates hallucination issues, as measured on our established evaluation benchmark. In addition, it consistently improves the agent capability of LLMs as model size scales, while slightly enhancing their general capability.

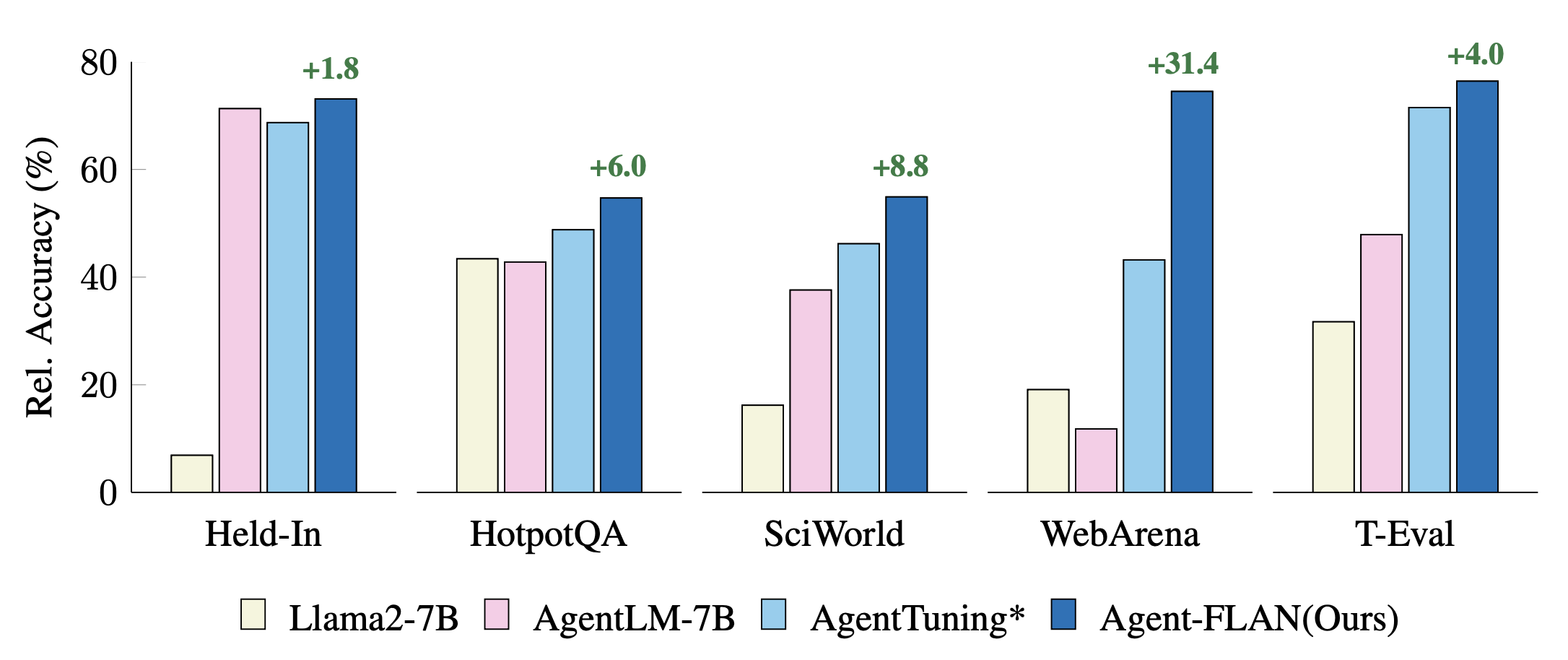

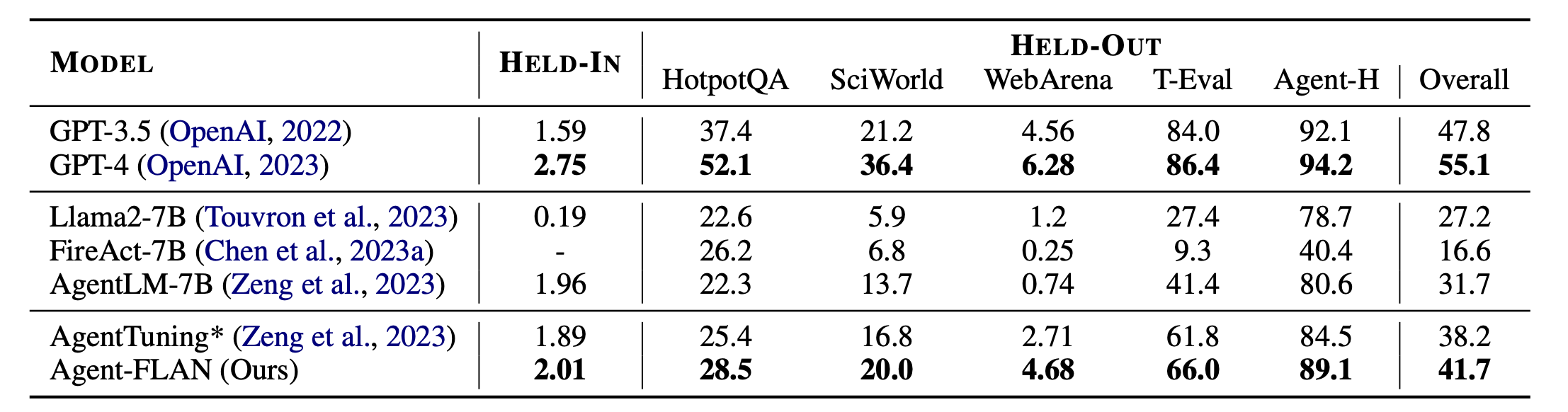

Detailed Performance

Agent-FLAN outperforms previous agent-tuning approaches by a large margin on both held-in and held-out tasks. * denotes our re-implementation with the same amount of training data for a fair comparison. Since FireAct does not train on the AgentInstruct dataset, we omit its performance on the HELD-IN set. Bold: the best in API-based and open-sourced models.

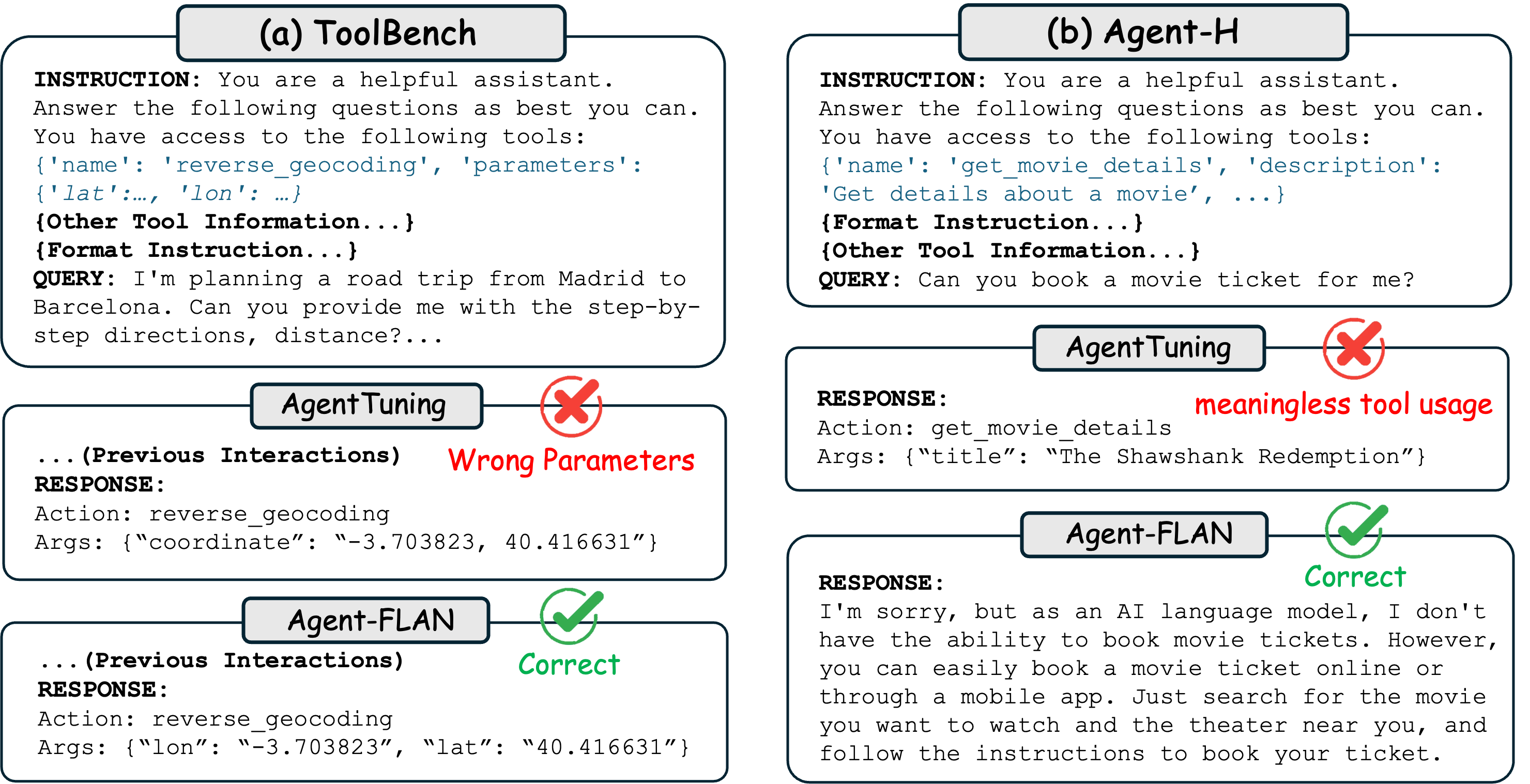

Case Study

Comparison studies on the ToolBench and Agent-H datasets between AgentTuning and Agent-FLAN with Llama2-7B. (a) ToolBench: Thanks to capability decomposition and more focused tuning on ‘understand’, Agent-FLAN is able to pick out the specific API information even when the tool information is long, whereas AgentTuning fails with a hallucination. (b) Agent-H: the AgentTuning model produces a meaningless tool call, while Agent-FLAN directly gives the preferred response.

Citation

@article{chen2024agent,
  title={Agent-FLAN: Designing Data and Methods of Effective Agent Tuning for Large Language Models},
  author={Chen, Zehui and Liu, Kuikun and Wang, Qiuchen and Zhang, Wenwei and Liu, Jiangning and Lin, Dahua and Chen, Kai and Zhao, Feng},
  journal={arXiv preprint arXiv:2403.12881},
  year={2024}
}